An Observation

In just a few months, MCP has become the de facto standard for AI applications. Meanwhile, new proposals like A2A, ACP, and AG-UI have emerged. How should we understand these protocols? What do they get right, and what is still missing?

A few days ago, I saw a tech guru liken MCP to a Monad on his blog. That analogy inspired me: perhaps many concepts from functional programming fit surprisingly well with the agent universe.

The Reincarnation of Programming Paradigms

Let’s first revisit Karpathy’s concept of Software 3.0, starting with the “LLM OS” he described. TBH, what he’s describing looks less like an OS and more like a computer. The LLM occupies the CPU’s role, and training an LLM feels more akin to semiconductor manufacturing than software engineering (Karpathy himself hinted at this as well). The chatbot can basically be seen as the command-line interface, and we are actually working with bare metal rather than a proper “OS”. MCP being compared to USB, a hardware abstraction layer, is no coincidence, although this time the programming paradigm has fundamentally shifted.

Karpathy also mentioned the “generate-verify” loop, which is strongly reminiscent of declarative programming, especially when looking at interactions between users and agents.

We won’t scare our non-engineer readers off by pasting code, but here’s a brief background:

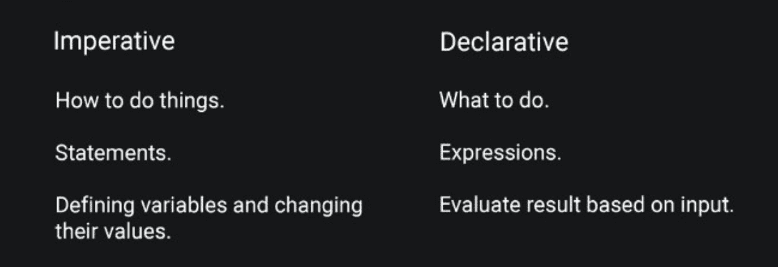

The industry has been dominated by imperative programming for decades: languages like C/C++, Java, and mainstream Python explicitly instruct computers step by step on what to do.

Declarative programming, on the other hand, is about defining concepts and their relationships, combining them into higher-level abstractions, and succinctly describing the intended outcomes (sound similar to writing prompts?). Functional programming languages such as Lisp and Haskell fall predominantly into this declarative camp.
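For readers who do want to see the difference in code, here is a minimal sketch in Python. The function names and the sample task (summing the squares of even numbers) are made up for illustration. Both versions compute the same result, but the first spells out every step while the second only describes what the result is.

```python
numbers = [1, 2, 3, 4, 5, 6]


def sum_even_squares_imperative(values):
    """Imperative style: tell the machine how to do it, step by step."""
    total = 0
    for v in values:
        if v % 2 == 0:      # explicit branching
            total += v * v  # explicit state mutation
    return total


def sum_even_squares_declarative(values):
    """Declarative style: describe what the result is."""
    return sum(v * v for v in values if v % 2 == 0)


print(sum_even_squares_imperative(numbers))
print(sum_even_squares_declarative(numbers))
```

The declarative version leaves the looping and accumulation to the language runtime, which is roughly the bargain a prompt makes with an LLM.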

Declarative programming is elegant, but historically not very efficient. The machine must do the heavy lifting to interpret these abstract definitions, and there are additional constraints, like functions needing to avoid side effects (such as state mutations). To maintain elegance while still being practical, Haskell adopted the concept of Monads from category theory.

Monad: A Missing Abstraction

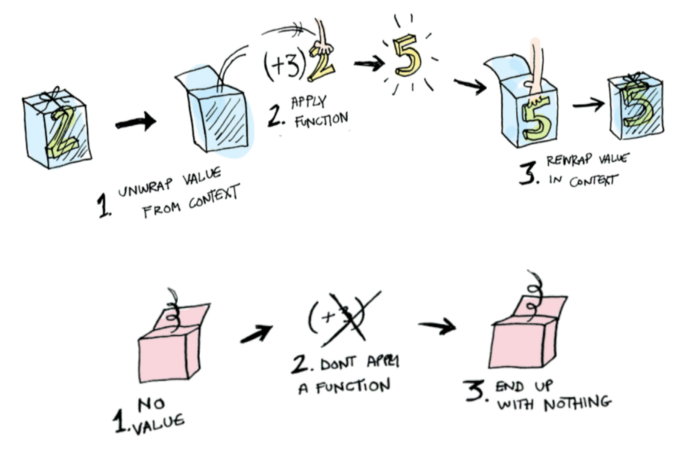

The Monad abstraction addresses this issue: how do we elegantly abstract away all the messy details—such as error handling, input/output interactions, and state management—so the core logic remains clean, composable, and readable?

To solve this, a Monad defines a composable, context-aware container for computations.

Formally, a Monad consists of three parts:

- A type constructor that wraps values in a context (type).

- A way to chain computations (bind).

- A way to inject ordinary values into this container (return).
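The three parts above can be sketched in a few lines of Python. This is an illustrative toy modeled on Haskell's Maybe, not a library API: the class `Maybe`, its `unit` method (playing the role of return), and the helpers `parse_int` and `reciprocal` are all names assumed for this example.

```python
from dataclasses import dataclass
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")
U = TypeVar("U")


@dataclass(frozen=True)
class Maybe(Generic[T]):
    """The type: a container that either holds a value or is empty."""
    value: Optional[T] = None
    present: bool = False

    @staticmethod
    def unit(value: T) -> "Maybe[T]":
        """return: inject an ordinary value into the container."""
        return Maybe(value, True)

    @staticmethod
    def nothing() -> "Maybe[T]":
        """The empty container, standing in for a failed computation."""
        return Maybe()

    def bind(self, fn: Callable[[T], "Maybe[U]"]) -> "Maybe[U]":
        """bind: run the next step only if a value is present."""
        return fn(self.value) if self.present else self


def parse_int(text: str) -> Maybe[int]:
    try:
        return Maybe.unit(int(text))
    except ValueError:
        return Maybe.nothing()


def reciprocal(n: int) -> Maybe[float]:
    return Maybe.nothing() if n == 0 else Maybe.unit(1 / n)


ok = Maybe.unit("4").bind(parse_int).bind(reciprocal)
bad = Maybe.unit("zero").bind(parse_int).bind(reciprocal)
print(ok)   # holds 0.25
print(bad)  # empty: the failure short-circuited the chain
```

Note that neither `parse_int` nor `reciprocal` checks whether the previous step failed. That bookkeeping lives entirely in `bind`.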

Key features of Monads:

- Encapsulation: Computations and their context are packaged together.

- Composability: Multiple monadic computations can seamlessly chain together through bind (>>=).

- Associativity: The outcome is unaffected by how chained computations are grouped, as long as their order is preserved, similar to how (a + b) + c equals a + (b + c).

- Context Auto-propagation: State, exceptions, and environments flow automatically between steps.
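To make associativity and context propagation concrete, here is a small sketch using a toy logging container in the spirit of a Writer Monad. `Logged`, `double`, and `increment` are hypothetical names for this example. The check shows that regrouping a chain leaves the result unchanged, while reordering the steps does not.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class Logged:
    """A value packaged together with its context: a log of steps taken."""
    value: int
    log: Tuple[str, ...] = ()

    @staticmethod
    def unit(value: int) -> "Logged":
        return Logged(value)

    def bind(self, fn: Callable[[int], "Logged"]) -> "Logged":
        nxt = fn(self.value)
        # The log flows forward automatically; steps never touch it directly.
        return Logged(nxt.value, self.log + nxt.log)


def double(x: int) -> Logged:
    return Logged(x * 2, (f"double {x}",))


def increment(x: int) -> Logged:
    return Logged(x + 1, (f"increment {x}",))


start = Logged.unit(5)

# Same order, different grouping: identical results (associativity).
left = start.bind(double).bind(increment)
right = start.bind(lambda x: double(x).bind(increment))

# Different order: a different result (bind is not commutative).
swapped = start.bind(increment).bind(double)

print(left == right)
print(left.value, swapped.value)
```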

Though abstract, the Monad concept has influenced numerous modern technologies, from JavaScript’s Promise pattern and async programming across various languages, to C#’s LINQ, Rust’s Option, ReactiveX’s Observable, and arguably even Kubernetes’ declarative configurations. Wherever you encounter chained APIs, encapsulated contexts, or composable semantics, there’s likely a Monad soul in it.

MCP is the Monad for Agents, Almost

When viewing MCP through the lens of Monads, we see what MCP got right—and also what’s still missing:

Correct direction:

- Unified interface abstraction (analogous to Monad’s type class).

- Capability encapsulation (hiding implementation details).

- Basic compositional support (tool chaining).

Key missing elements:

- Lack of a standardized bind operation.

- Absence of fundamental primitives like return/pure.

- Insufficient support for multi-agent collaboration.
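To illustrate what a standardized bind and pure could buy, here is a purely hypothetical sketch. Nothing in it is part of MCP or any MCP SDK: `ToolResult`, `pure`, `bind`, and the two stand-in tools `lookup_city` and `describe_coordinates` are invented for this example. The point is only that, once results share one container, chaining and error propagation stop being each client's private glue code.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class ToolResult:
    """Hypothetical uniform container for the outcome of a tool call."""
    value: Optional[str] = None
    error: Optional[str] = None

    @staticmethod
    def pure(value: str) -> "ToolResult":
        """Lift a plain value into the container (return/pure)."""
        return ToolResult(value=value)

    def bind(self, tool: Callable[[str], "ToolResult"]) -> "ToolResult":
        """Feed a successful result to the next tool; pass errors through."""
        if self.error is not None:
            return self
        return tool(self.value)


# Two stand-in "tools" with made-up behavior.
def lookup_city(name: str) -> ToolResult:
    table = {"paris": "48.85,2.35"}
    key = name.lower()
    if key not in table:
        return ToolResult(error=f"unknown city: {name}")
    return ToolResult.pure(table[key])


def describe_coordinates(coords: str) -> ToolResult:
    lat, lon = coords.split(",")
    return ToolResult.pure(f"lat={lat} lon={lon}")


good = ToolResult.pure("Paris").bind(lookup_city).bind(describe_coordinates)
failed = ToolResult.pure("Atlantis").bind(lookup_city).bind(describe_coordinates)
print(good)
print(failed)
```

In the failing chain, `describe_coordinates` never runs. The error from the first tool travels to the end untouched.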

These gaps help explain the emergence of new protocols:

A2A (Agent-to-Agent)

- Enables higher-order composition between agents.

- Allows agents to invoke other agents recursively.

- Conceptually resembles Monad Transformers.

ACP (Agent Connection Protocol)

- Standardizes the connection between capabilities.

- Provides bind-like semantics.

- Automatically adapts different capabilities to interact smoothly.

AG-UI (Agent-User Interaction Protocol)

- Manages context flow during human-agent interactions.

- Retains state across multi-turn conversations.

- Analogous to the Reader Monad for environmental propagation.
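For readers unfamiliar with the Reader Monad, here is a minimal sketch of the idea in Python. It has nothing to do with AG-UI's actual wire format: `Reader`, `ask`, `greet`, `add_locale`, and the environment keys are assumptions made for illustration. A Reader threads a shared, read-only environment through every step without passing it explicitly. Retaining mutable state across turns would be closer to a State Monad.

```python
from typing import Any, Callable, Dict

Env = Dict[str, str]


class Reader:
    """A computation that needs an environment before it can produce a value."""

    def __init__(self, run: Callable[[Env], Any]):
        self.run = run

    @staticmethod
    def unit(value: Any) -> "Reader":
        # Ignore the environment and just return the value.
        return Reader(lambda env: value)

    def bind(self, fn: Callable[[Any], "Reader"]) -> "Reader":
        # Run this step, then run the next step with the same environment.
        return Reader(lambda env: fn(self.run(env)).run(env))


# Primitive that exposes the environment itself.
ask = Reader(lambda env: env)


def greet() -> Reader:
    return ask.bind(lambda env: Reader.unit(f"Hello, {env['user']}"))


def add_locale(text: str) -> Reader:
    return ask.bind(lambda env: Reader.unit(f"{text} [{env['locale']}]"))


program = greet().bind(add_locale)
result = program.run({"user": "Ada", "locale": "en-GB"})
print(result)
```

Neither `greet` nor `add_locale` takes the environment as a parameter, yet both can read it. It is supplied once, at `run`.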

These protocols are each filling critical gaps left by MCP when viewed from a Monad perspective.

Claude Code’s recently introduced hooks are not protocols in the strict sense, yet they share a similar spirit: small computational units with clearly defined input/output contracts.

Why This Observation Matters

There’s nothing genuinely new under the sun. The problems Monads aimed to address decades ago are likely the same challenges now resurfacing, or about to resurface, in agent application architectures.

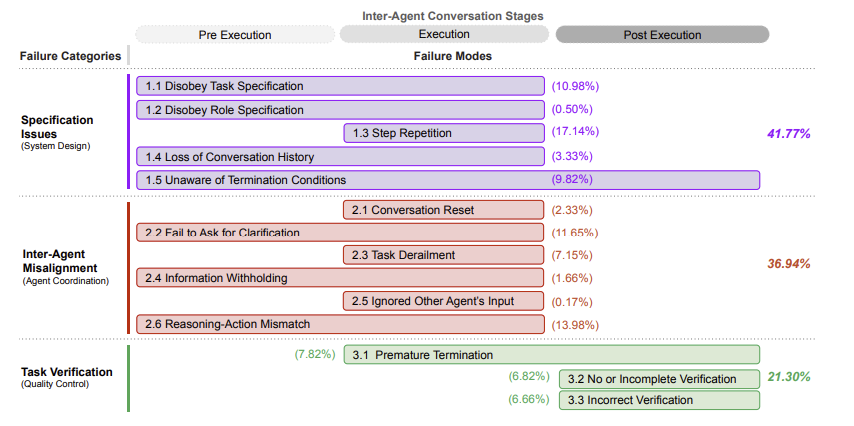

Having said that, agent computing, especially multi-agent systems (MAS), introduces uncertainty and additional complexity as abstraction levels rise. A UC Berkeley-led research team previously published the paper Why Do Multi-Agent LLM Systems Fail?, proposing a taxonomy called MAST (Multi-Agent System Failure Taxonomy). They analyzed over 200 tasks across seven MAS frameworks, identifying 14 failure modes classified into three categories:

- Specification Issues (41.77%)

  - Including flawed system designs, ambiguous task definitions, and poor dialogue management.

  - Common failures: violating specifications, repeating steps unnecessarily, losing conversational history.

- Inter-Agent Misalignment (36.94%)

  - Caused by poor communication, failed coordination, and conflicting agent behaviors.

  - Common failures: dialogue resets, failure to clarify tasks, deviation from tasks, withholding information.

- Task Verification Issues (21.30%)

  - Poorly implemented validation processes failing to detect or correct errors.

  - Common failures: premature termination, incorrect validation logic, overlooked errors.

The article emphasizes that most failures stemmed from inadequate system designs and agent coordination—not limitations of individual LLMs. Even adding a validation step only boosted overall success rates to about 33%. Clearly, systematic design of roles, verification procedures, and communication protocols is critical for robust MAS.

And let’s not forget: the pitfalls of traditional distributed computing won’t disappear simply because you are vibing.

Declarative programming and Monads offer powerful abstraction perspectives. But the future likely won’t rely on traditional Monads unchanged—rather, it might evolve into something like a “probabilistic Monad”? I don’t know yet…

I’m curious to hear your thoughts: what should the future of agent application architecture look like? What kinds of protocols do we still need?

P.S. Lisp was originally designed for early symbolic AI research, and now, in an interesting twist, it seems functional programming is making a comeback in AI. Intriguing…

Thanks for actually reading the whole thing