With all of the FUD out there regarding the "popping of the AI bubble" and ORCL CDS spreads blowing out, I thought the article below was a concise, informed position from Gavin, basically saying: "trust the process."

Link: https://x.com/GavinSBaker/status/1991248768654803337

A few somewhat obvious takeaways:

1. If foundation models prove not to be a commodity, this clearly shifts the balance of power towards the US. It seems reasonable to assume internal capabilities at the top labs surpass what is publicly available.

2. The performance gap vs. open source is likely to widen as Blackwell comes online. Chip bans will start to bite Chinese OSS harder next year.

3. It's pretty crazy that the closed foundation model race is a four-horse oligopoly and the only "pure play"(ish) publicly traded exposure available is $GOOG. Personally, though, that is the name I would most like to own.

4. Cost per token is the key output, and $GOOG is again the leader here given its substantial infra investments.

Google has obviously run quite a bit recently, but a structural shift in market share seems clearly underway. There will likely be some interim pullbacks, but it looks like the most obvious long-term hold of the Mag 7.

The "$GOOG is an AI loser" crowd is looking increasingly foolish.

Great presentation from Nathan Lambert at "The Curve" on the state of open source models.

Link: https://docs.google.com/presentation/d/1f1Et0Mz8zb1yVCnCgdYSy4tAa0Kv_gKT4wPEg1XPdUA/edit?slide=id.g38edb366806_0_6#slide=id.g38edb366806_0_6

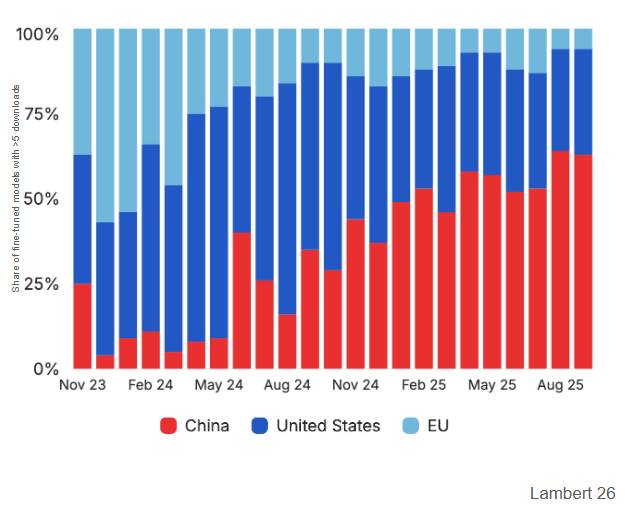

The rise of the Chinese ecosystem has been blistering, going from negligible downloads at the start of 2025 to well over 50% share by September of the same year, compounding across ~20 different organizations.

Qwen's ecosystem in particular has been on a tear, emerging as the starting point of choice for AI developers. It's interesting to think through the incentives of Chinese labs and whether they will continue to open source.

- While Chinese labs continue to lag US closed-source models, open source is a great way to close the gap

- Leading in open source is fantastic marketing to continue to attract top AI talent

- The willingness to pay for software / AI in China is de minimis, leading large ecosystems to monetize gains in AI capabilities through adjacent products

- The Chinese government is highly focused on AI diffusion through the rest of society with its "AI+" initiative; ubiquitous, free intelligence is aligned with that vision

- Culturally, DeepSeek has set the tone by open sourcing SOTA-level models, a precedent that will be difficult to break while remaining competitive.

Overall, my read is that China is likely to continue open sourcing given the above incentives. If the US does not want Chinese models to become the starting point for every developer looking to tune or post-train an AI for their product, it has some work to do...

PSA: Delphi is co-hosting a summit on Open Source AI in San Francisco on Oct. 24

This is a one-day, single-track event for founders and engineers focused on the frontier of open source and distributed AI, part of the Linux Foundation's Open Source AI Week.

We are bringing together senior researchers and leaders from OpenAI, Google DeepMind, Meta, NVIDIA, alongside the founders and maintainers of top open-source AI projects like Letta, Cline, and Qdrant. They will be joined by leading research labs in open and distributed AI.

The agenda is technical, with no marketing pitches. You will hear directly from the people building foundational models and core infrastructure. The goal is to move beyond hype and focus on making AI universally accessible, provably fair, impartial and beneficial for all.

This is an opportunity to connect with the maintainers of leading open-source projects and the founders of emerging AI-native companies. Should be an awesome group.

***Tickets can be bought here***

If keen, feel free to DM me on Twitter @ponderingdurian with a bit of your background / experience in AI, and I may be able to provide a discount to listed pricing :)
